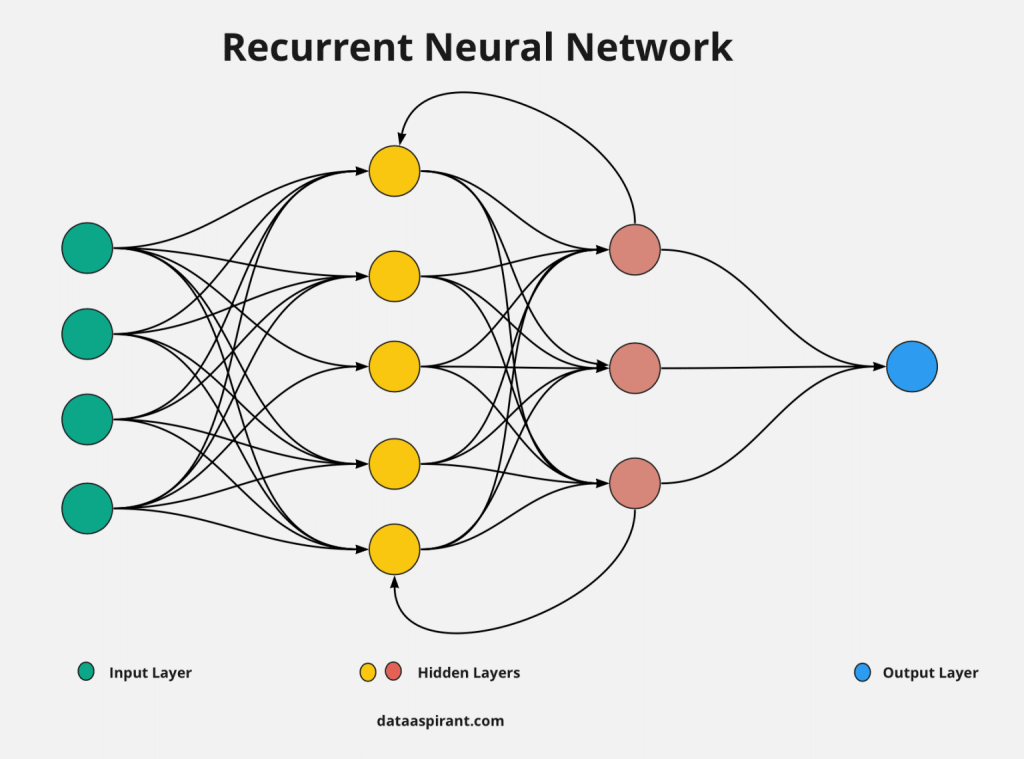

Recurrent Neural Networks (RNNs) were designed for data that arrives in sequences—text, time-series signals, clickstreams, and sensor readings. Their core idea is simple: at each time step, the model updates a hidden state that carries information from the past to the present. In practice, the hidden state is where memory “lives,” and the way it evolves over time determines whether the network can capture short patterns (like local word context) or long patterns (like topic, intent, or delayed events). This is a key concept for anyone learning sequence modelling in a data scientist course in Kolkata, because it explains both the promise of RNNs and the reason gated variants became the default choice.

The Hidden State as a Moving Summary of the Past

In a basic RNN, the hidden state $h_t$ is updated using the current input $x_t$ and the previous hidden state $h_{t-1}$. Conceptually, the hidden state is a compressed summary of everything the model thinks is relevant from earlier steps.
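The update described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: it assumes the common formulation $h_t = \tanh(W_x x_t + W_h h_{t-1} + b)$, and the names `rnn_step` and `run_rnn` and the toy weights are hypothetical choices made for this example.

```python
import math

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One vanilla RNN update: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    h_t = []
    for i in range(len(b)):
        pre = b[i]
        pre += sum(W_x[i][j] * x_t[j] for j in range(len(x_t)))
        pre += sum(W_h[i][j] * h_prev[j] for j in range(len(h_prev)))
        h_t.append(math.tanh(pre))
    return h_t

def run_rnn(inputs, W_x, W_h, b):
    """Feed a sequence through the cell, starting from a zero hidden state."""
    h = [0.0] * len(b)
    states = []
    for x_t in inputs:
        h = rnn_step(x_t, h, W_x, W_h, b)
        states.append(h)
    return states

# Toy setup (assumed values): 1 input feature, hidden size 2.
W_x = [[0.5], [-0.3]]
W_h = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.1]
states = run_rnn([[1.0], [0.0], [-1.0]], W_x, W_h, b)
```

Note that the hidden state keeps the same size however long the input sequence is, and every step reuses the same weights.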

Two important dynamics follow from this design:

- Compression: The model must squeeze history into a fixed-size vector. If the sequence is long and the hidden size is small, the network must prioritise what to keep.

- Recurrence: Because $h_t$ depends on $h_{t-1}$, information must survive repeated transformations. If those transformations dampen or distort signals, the model “forgets” quickly.

These dynamics are not only mathematical details—they show up as real behaviour: some RNNs learn local patterns well but struggle with long-term structure, such as understanding a subject introduced many words earlier or predicting a failure event that depends on early sensor drift.

Why Long-Term Dependencies Break in Vanilla RNNs

The classic challenge is the vanishing and exploding gradient problem. During training, gradients are propagated backwards through time, a procedure known as Backpropagation Through Time (BPTT). Because each step backwards multiplies the gradient by the recurrent weight matrix and the activation derivative, the gradient magnitude can shrink toward zero (vanish) or grow uncontrollably (explode) as the number of steps increases.

What this means in plain terms:

- If gradients vanish, early time steps receive almost no learning signal. The model cannot adjust parameters to remember information far back in the sequence.

- If gradients explode, training becomes unstable, causing erratic updates and divergence.
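The effect of repeated multiplication can be seen in a toy scalar recurrence. The sketch below assumes a one-dimensional RNN with no input, $h_t = \tanh(w \cdot h_{t-1})$, plus a linear variant without the tanh. The function name `gradient_through_time` and the weight values are hypothetical, chosen only to make the behaviour visible.

```python
import math

def gradient_through_time(w, steps, h0=0.5, linear=False):
    """Return |d h_T / d h_0| for a scalar recurrence unrolled over `steps`.

    linear=False: h_t = tanh(w * h_{t-1}), per-step factor w * (1 - h_t^2)
    linear=True:  h_t = w * h_{t-1},       per-step factor w
    """
    h, grad = h0, 1.0
    for _ in range(steps):
        if linear:
            h = w * h
            grad *= w
        else:
            h = math.tanh(w * h)
            grad *= w * (1.0 - h * h)  # chain rule through tanh
    return abs(grad)

vanishing = gradient_through_time(0.5, 50)               # small weight
saturated = gradient_through_time(3.0, 50)               # tanh saturates
exploding = gradient_through_time(1.5, 50, linear=True)  # no squashing
```

In this toy setting a small weight shrinks the gradient at every step, while a large weight with tanh also shrinks it because the state saturates. Only the linear recurrence with a weight above 1 grows without bound. Real networks use matrices rather than scalars, so this is an intuition aid rather than a proof.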

Hidden state dynamics amplify this problem. When the recurrent transformation repeatedly pushes the hidden state into saturated activation regions, where the derivative of an activation such as tanh is close to zero, the network becomes less sensitive to earlier inputs. This is why, in many real projects discussed in a data scientist course in Kolkata, practitioners quickly move from vanilla RNNs to gated architectures that offer a more reliable pathway for information and gradients.

How Gated Architectures Control Information Flow

Gated RNNs, primarily LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit), were created to manage hidden state dynamics more deliberately. They introduce gates that decide what to keep, what to forget, and what to expose as output to the next time step and the next layer.

LSTM: Separating Memory from Output

LSTMs maintain two states:

- A cell state (long-term memory track)

- A hidden state (exposed output track)

They use three main gates:

- Forget gate: decides which parts of memory to erase

- Input gate: decides what new information to write

- Output gate: decides what to reveal as hidden output

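This structure creates a relatively smooth “highway” for information to persist. Because the cell state is updated additively (the old value scaled by the forget gate, plus gated new content) rather than being passed through a squashing nonlinearity at every step, it can carry relevant signals over many steps with less distortion, reducing vanishing gradients and improving long-term learning.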
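To make the three gates concrete, here is a minimal scalar sketch of one LSTM step using the standard gate equations. The function names, the parameter dictionary layout, and the bias values are assumptions made for illustration. Real implementations operate on vectors and learn these parameters.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, p):
    """One scalar LSTM step. `p` maps a gate name to (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x_t + w_h * h_prev + b

    f = sigmoid(pre("forget"))       # which parts of memory to erase
    i = sigmoid(pre("input"))        # how much new content to write
    o = sigmoid(pre("output"))       # how much memory to reveal
    g = math.tanh(pre("candidate"))  # proposed new content
    c_t = f * c_prev + i * g         # additive cell-state update
    h_t = o * math.tanh(c_t)         # exposed hidden state
    return h_t, c_t

# Hypothetical parameters: `keep` holds the forget gate open, `leak` does not.
keep = {"forget": (0.0, 0.0, 10.0), "input": (0.0, 0.0, -10.0),
        "output": (0.0, 0.0, 0.0), "candidate": (1.0, 0.0, 0.0)}
leak = dict(keep, forget=(0.0, 0.0, 0.0))

def final_cell(params, steps=100):
    """Start with c = 1.0, feed zeros, and return the cell state at the end."""
    h, c = 0.0, 1.0
    for _ in range(steps):
        h, c = lstm_step(0.0, h, c, params)
    return c
```

With the forget gate held near 1, `final_cell(keep)` stays close to its starting value after 100 steps, whereas `final_cell(leak)` decays toward zero because the memory is halved at every step.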

GRU: A Simpler Gate Design

GRUs combine memory and hidden representation more tightly, using:

- An update gate (how much past to keep vs replace)

- A reset gate (how much past to ignore when forming new content)

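GRUs have fewer parameters than LSTMs of the same hidden size because they use two gates and no separate cell state. They are often easier to train and can be competitive with LSTMs, especially when data is limited or sequences are moderately long.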
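A scalar sketch of one GRU step shows how the two gates interact. It assumes the convention in which the update gate `z` is the fraction of the previous state that is kept. Some references swap the roles of `z` and `1 - z`, so check the convention of whichever library you use. The name `gru_step` and the parameter values are hypothetical.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def gru_step(x_t, h_prev, p):
    """One scalar GRU step. `p` maps a gate name to (w_x, w_h, bias)."""
    def pre(name, h):
        w_x, w_h, b = p[name]
        return w_x * x_t + w_h * h + b

    z = sigmoid(pre("update", h_prev))         # how much past to keep
    r = sigmoid(pre("reset", h_prev))          # how much past feeds new content
    n = math.tanh(pre("candidate", r * h_prev))
    return z * h_prev + (1.0 - z) * n          # blend old state and new content

# Assumed parameters: the only difference is the update-gate bias.
keep_past = {"update": (0.0, 0.0, 10.0), "reset": (0.0, 0.0, -10.0),
             "candidate": (1.0, 1.0, 0.0)}
replace_past = dict(keep_past, update=(0.0, 0.0, -10.0))

h_kept = gru_step(1.0, 0.8, keep_past)         # stays near 0.8
h_replaced = gru_step(1.0, 0.8, replace_past)  # moves near tanh(1.0)
```

Unlike the LSTM, there is no separate cell state here: the same value serves as both memory and output.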

In both cases, the key improvement is the same: gates let the network choose whether to preserve information rather than forcing everything through the same transformation at every step.

Practical Tips for Training and Diagnosing Hidden State Issues

Even with gates, good training practices matter. Here are pragmatic steps that improve long-term dependency learning:

- Use gradient clipping: Rescaling gradients whose norm exceeds a chosen threshold is a standard defence against exploding gradients and stabilises training.

- Tune sequence length and truncation: Truncated BPTT reduces computation, but too-short truncation can prevent learning long dependencies. Choose a window that matches the expected dependency horizon.

- Initialise forget gate bias thoughtfully (LSTM): Setting the forget gate bias to a positive value (1.0 is a common choice) encourages the gate to retain information early in training, which can help memory formation.

- Regularise carefully: Dropout can help generalisation, but aggressive recurrent dropout may disrupt memory. Apply it with intent.

- Monitor diagnostics: Track training loss curves, gradient norms, and validation behaviour. If performance improves only on short contexts, the model may not be retaining information.

- Engineer inputs: Sometimes the best way to support long dependencies is to add clearer signals—lags, rolling statistics, or event markers—so the model does not have to infer everything from raw sequences.
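Two of the tips above, gradient clipping and monitoring gradient norms, can be combined in one small helper. The sketch below clips by global L2 norm over a flat list of gradient values and returns the pre-clip norm so it can be logged. The function name `clip_by_global_norm` and the threshold are assumptions. Deep learning frameworks ship their own utilities for this, which should be preferred in practice.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale `grads` so their L2 norm is at most `max_norm`.

    Returns (clipped_grads, original_norm); the norm is useful for logging.
    """
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads), norm          # already within the threshold
    scale = max_norm / norm
    return [g * scale for g in grads], norm

# A gradient of norm 5.0 is scaled down to norm 1.0; its direction is unchanged.
clipped, norm_before = clip_by_global_norm([3.0, 4.0], max_norm=1.0)

# A small gradient passes through untouched.
small, small_norm = clip_by_global_norm([0.1, 0.2], max_norm=1.0)
```

Logging `norm_before` at each step gives a cheap diagnostic: frequent large spikes suggest exploding gradients, while norms that collapse toward zero point to vanishing ones.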

These are exactly the kinds of implementation details that turn theory into working models in a data scientist course in Kolkata, especially for time-series forecasting and NLP pipelines.

Conclusion

Hidden state dynamics sit at the heart of how RNNs process sequences. Vanilla RNNs often struggle with long-term dependencies because gradients vanish or explode across many time steps, causing the model to forget early information. Gated architectures like LSTMs and GRUs mitigate this by controlling what to forget, what to store, and what to output, enabling more stable learning over long sequences. With sensible training practices such as gradient clipping, appropriate sequence windows, and careful regularisation, gated RNNs remain a strong option for sequential problems where temporal structure matters, and they continue to be an essential concept for learners building sequence modelling skills in a data scientist course in Kolkata.